A Tracking Tool For Exhibition Designers

Client

- University of Konstanz (Bachelor’s Thesis)

Awards

- Best Paper Award (Mensch und Computer 2018)

Year

- 2015

Tasks

- Analysis

- Concept

- Implementation

- Evaluation

- Publication

Can exhibition designers create interactive installations without coding?

In my bachelor’s thesis, I designed Argus Vision, a DIY tool that lets exhibition designers use camera tracking to rapidly prototype and develop immersive exhibitions and interactive installations. To create such exhibitions, exhibition design companies normally employ professionals from a wide range of disciplines, which makes it difficult for smaller companies to achieve similar results. Developing DIY tools that enable designers from all involved disciplines to create interactive installations themselves is therefore one goal of Human-Computer Interaction research.

I successfully used Argus Vision in an exhibition to test its feasibility. In addition, I interviewed exhibition designers from some of Germany’s leading exhibition design companies to investigate how useful the tool would be for them.

The video shows the functions of Argus Vision.

Results

This project had many rewarding outcomes, ranging from the development of Argus Vision itself and its successful use in an exhibition to the interviews with the exhibition designers. Finally, I published excerpts of this thesis and won the best paper award for that publication at the Mensch und Computer 2018 conference in Dresden, Germany.

Argus Vision

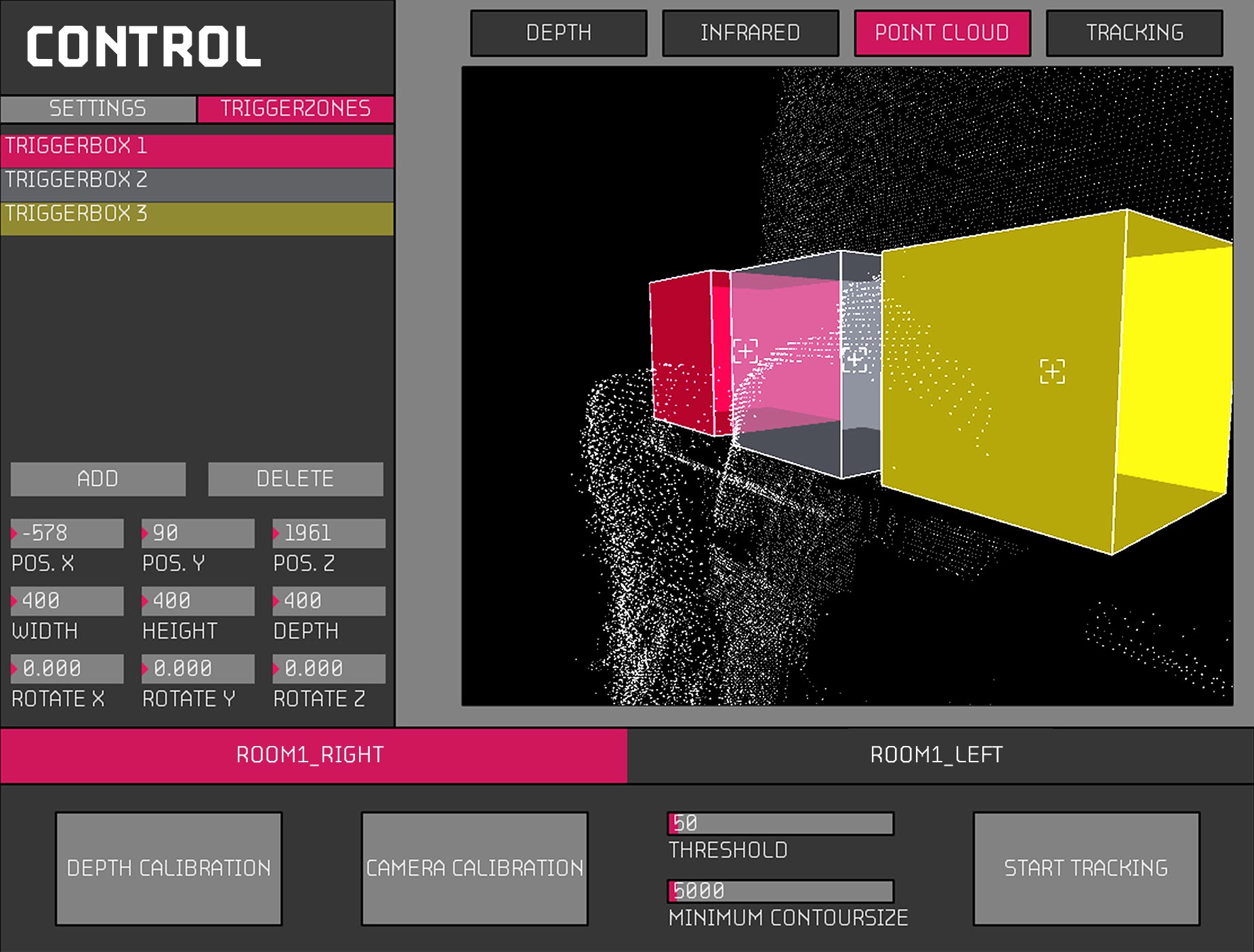

Argus Vision is a middleware that hides the challenging implementation of a tracking system behind an easy-to-use UI and simplifies the results of the tracking algorithm. Argus Vision then sends this data to other applications, such as media control systems like Resolume and MadMapper or game engines like Unity, allowing exhibition designers to create interactive installations without coding.
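To illustrate what "simplifying the results" can mean for a client application, here is a minimal sketch, assuming the tracker reports blob centroids in camera pixels and that clients prefer resolution-independent values between 0 and 1. The class `TrackedBlob` and its `normalize` method are hypothetical names for illustration, not Argus Vision's actual code.

```java
/** One tracked visitor in a simplified, resolution-independent form (hypothetical format). */
final class TrackedBlob {
    final int id;
    final float x; // 0.0 = left edge of the camera image, 1.0 = right edge
    final float y; // 0.0 = top edge of the camera image, 1.0 = bottom edge

    TrackedBlob(int id, float x, float y) {
        this.id = id;
        this.x = x;
        this.y = y;
    }

    /** Converts a blob centroid from camera pixels to normalized coordinates clamped to [0, 1]. */
    static TrackedBlob normalize(int id, float px, float py, int frameWidth, int frameHeight) {
        if (frameWidth <= 0 || frameHeight <= 0) {
            throw new IllegalArgumentException("frame size must be positive");
        }
        return new TrackedBlob(id, clamp(px / frameWidth), clamp(py / frameHeight));
    }

    // Keeps values inside [0, 1] even if a centroid lies slightly outside the frame.
    private static float clamp(float v) {
        return Math.max(0f, Math.min(1f, v));
    }
}
```

With a 512 × 424 pixel frame, a centroid at (256, 212) becomes (0.5, 0.5), so a client such as Resolume or Unity can map it onto its own canvas without knowing the camera resolution.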

“If exhibition designers knew what they could do with it, [Argus Vision] enables sensational possibilities.”

Sebastian Oschatz, CEO, MESO Digital Interiors

Deployment in the Exhibition Tell Genderes

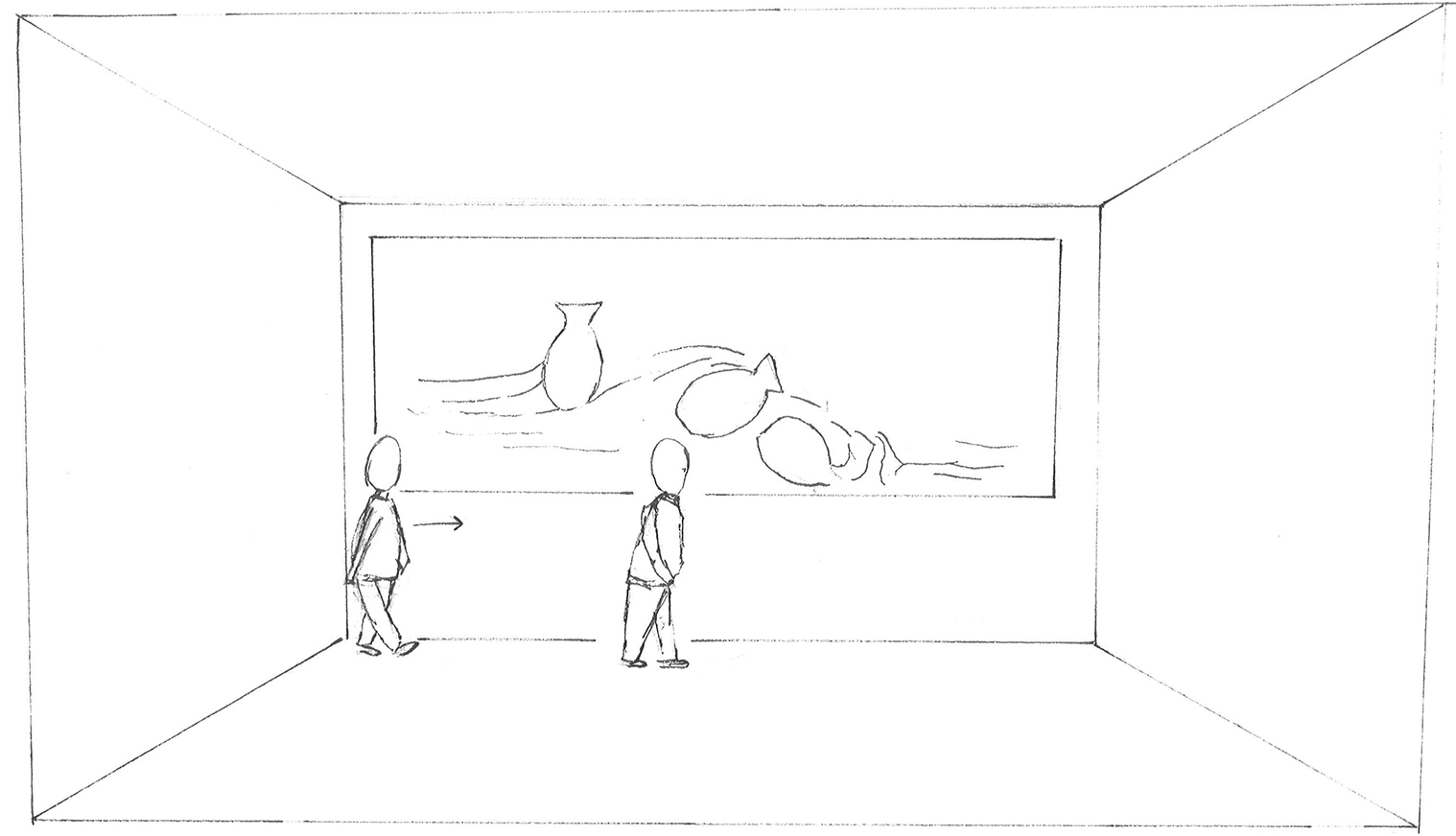

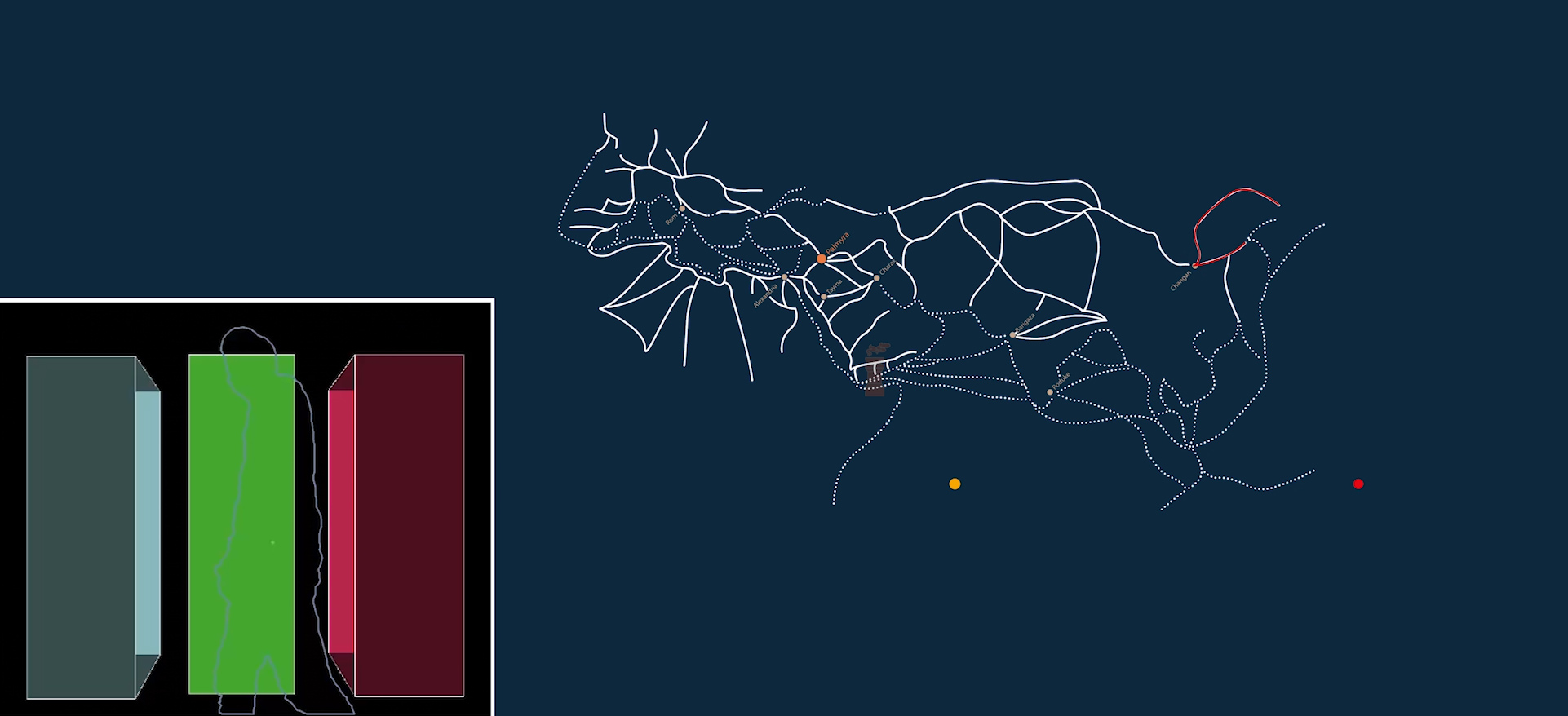

I was able to evaluate the tool in the wild by using it in the interactive installation „Bruch“ in the exhibition Tell Genderes at the Turm zur Katz in Konstanz. In this installation, the positions of people in the room (tracked by Argus Vision) controlled projected animations. The projections showed virtual glass that mimicked an actual showcase positioned in the middle of an exhibition room. Depending on the position and distance of visitors relative to the projection, the virtual glass either cracked or burst.
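The mapping from visitor positions to the state of the virtual glass can be pictured as a simple distance check. A minimal sketch, assuming floor-plane coordinates in meters and two hypothetical thresholds; the actual distances and logic used in „Bruch“ are not documented here, and `GlassMapper` is an illustrative name only.

```java
/** Possible states of the projected glass. */
enum GlassState { INTACT, CRACKED, BURST }

final class GlassMapper {
    // Hypothetical thresholds in meters, not the values used in the installation.
    static final double CRACK_DISTANCE = 2.0;
    static final double BURST_DISTANCE = 0.8;

    /**
     * Chooses the glass state from the visitor closest to the projection.
     * Each visitor is {x, z} on the floor plane; the projection sits at (projX, projZ).
     */
    static GlassState stateFor(double[][] visitors, double projX, double projZ) {
        double nearest = Double.POSITIVE_INFINITY; // no visitors means "far away"
        for (double[] v : visitors) {
            nearest = Math.min(nearest, Math.hypot(v[0] - projX, v[1] - projZ));
        }
        if (nearest <= BURST_DISTANCE) return GlassState.BURST;
        if (nearest <= CRACK_DISTANCE) return GlassState.CRACKED;
        return GlassState.INTACT;
    }
}
```

Only the nearest visitor matters in this sketch, so the glass reacts to whoever comes closest; a real installation might instead combine several visitors or add hysteresis to avoid flickering between states.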

I designed the room-spanning installation with an interdisciplinary team of designers and architects, and I implemented it. You can find more information on this project here.

Publications and Awards

The work on Argus Vision resulted in three publications. Besides the thesis itself, I published and presented a short version of it at the Mensch und Computer 2018 conference in Dresden, Germany, for which I received the best paper award. As is customary, the award recipient is invited to publish an extended version of the paper in i-com: Journal of Interactive Media. You can find the list of publications below.

Process

For Argus Vision, I cycled through the UX Lifecycle once. With the goal of developing an easy-to-use media control system that employs tracking technologies, I started with an analysis of the problem space, sketched possible solutions, implemented one of them, and evaluated the outcome.

Analyzing the Problem Space And Designing Solutions

Gathering requirements in this project was challenging, as I had no access to professional exhibition designers whom I could interview or observe at work. Moreover, as the target audience was exhibition designers without a technical background, it was questionable whether professional exhibition designers were the right people to interview in the first place.

Therefore, I went in two different directions. First, I interviewed a professional lighting designer based in Stuttgart, Germany, about the state of the art in media control. The main takeaway was that the industry relies on many standards, such as specific DMX controllers, but that these are challenging to work with and are therefore aimed only at professionals in the field. Moreover, even in this sector, no tool or piece of hardware allowed integrating tracking technology (apart from simple light sensors) to build interactive installations. Based on this interview, the first idea for the project was simple: build a tool that controls lighting based on the user’s movement. The interview also shaped the second step, an extensive literature review on scenography and exhibition design with a focus on DIY tracking tools. Unsurprisingly, there were few of them (the only publicly available one is TSPS).

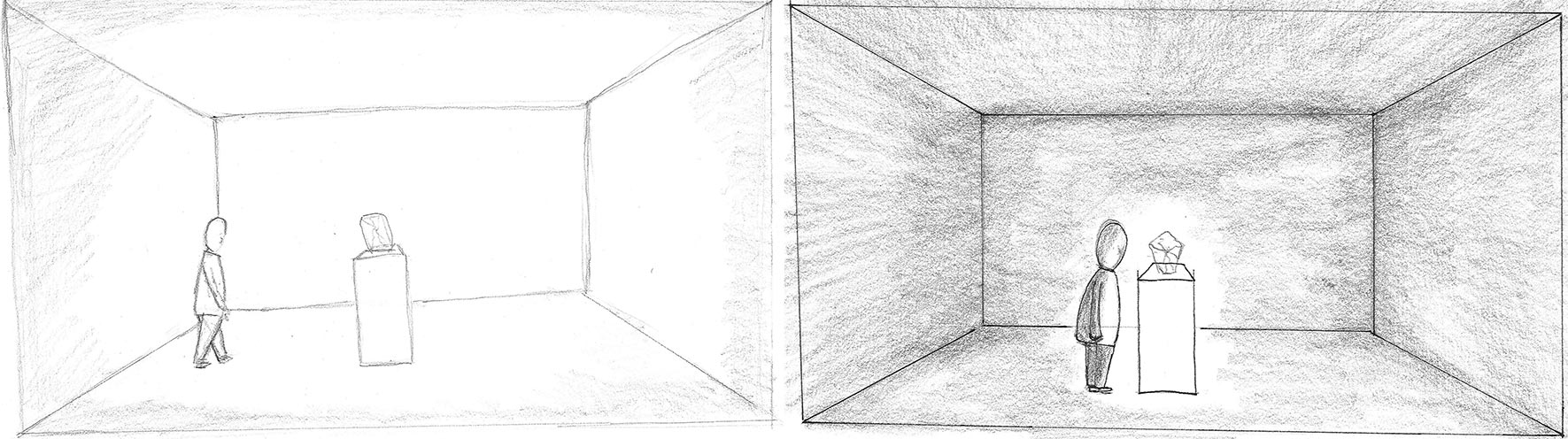

To design possible solutions for the problem space, I analyzed how current exhibitions use tracking technologies and what existing tracking tools are capable of. Based on this, I derived further requirements for the tool, for example, that it should also be able to control animations.

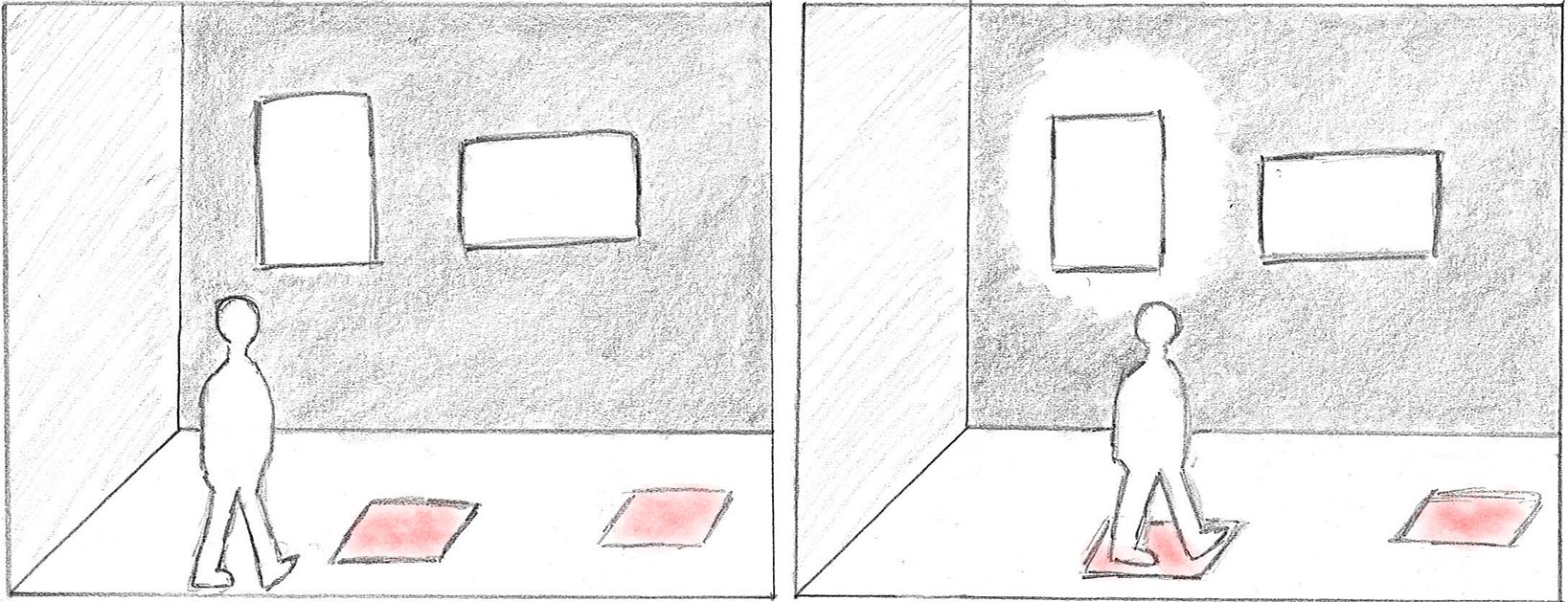

But most importantly, I noticed that many current installations do not need extensive tracking setups with millimeter precision. Instead, they often work like elaborate light switches: if a person enters a specific space or holds their hand in front of an object, something happens. This led to the concept of Triggerzones, virtual three-dimensional areas that detect the presence of visitors.
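At its core, a Triggerzone is a containment test. A minimal sketch, assuming each zone is an axis-aligned box in room coordinates and that the tracker delivers 3D points (for example, from a depth camera). `TriggerZone`, `contains`, and `isOccupied` are hypothetical names, and requiring a minimum number of points is an illustrative noise filter, not a documented feature of Argus Vision.

```java
/** An axis-aligned box in room coordinates that reports whether visitors are inside (hypothetical). */
final class TriggerZone {
    final String name;
    private final double minX, minY, minZ, maxX, maxY, maxZ;

    TriggerZone(String name, double minX, double minY, double minZ,
                double maxX, double maxY, double maxZ) {
        this.name = name;
        this.minX = minX; this.minY = minY; this.minZ = minZ;
        this.maxX = maxX; this.maxY = maxY; this.maxZ = maxZ;
    }

    /** True if the point lies inside the box, boundaries included. */
    boolean contains(double x, double y, double z) {
        return x >= minX && x <= maxX
            && y >= minY && y <= maxY
            && z >= minZ && z <= maxZ;
    }

    /**
     * True if at least minPoints tracked points ({x, y, z}) fall inside the zone.
     * Requiring several points filters out single noisy depth samples.
     */
    boolean isOccupied(double[][] points, int minPoints) {
        if (minPoints <= 0) return true;
        int count = 0;
        for (double[] p : points) {
            if (contains(p[0], p[1], p[2]) && ++count >= minPoints) {
                return true;
            }
        }
        return false;
    }
}
```

Axis-aligned boxes keep the test to six comparisons per point, which matters when a depth frame contains many thousands of points.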

Developing Argus Vision

I developed Argus Vision bottom-up, addressing each requirement from the analysis phase in isolation before combining them in the final tool. For example, I first focused on the tracking fundamentals. Here, I implemented a modified background subtraction algorithm, which compares the current camera frame to a previously saved background frame to find the differences between the two. These differences are then analyzed with a contour-tracing algorithm. Below you can see how this detection process works. It is the basis for all tracking features of Argus Vision.
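The two detection steps can be sketched as follows, assuming grayscale frames stored as `int[]` arrays of values from 0 to 255 and a hypothetical difference threshold. Note that, for brevity, this sketch groups foreground pixels with flood-fill connected-component labeling rather than the contour-tracing algorithm Argus Vision uses, and it does not reproduce the modifications to background subtraction mentioned above. `BackgroundSubtractor` and its methods are illustrative names.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;

final class BackgroundSubtractor {
    private final int width, height, threshold;
    private int[] background; // saved reference frame (grayscale, 0-255), null until saved

    BackgroundSubtractor(int width, int height, int threshold) {
        this.width = width;
        this.height = height;
        this.threshold = threshold;
    }

    /** Stores a copy of the current frame as the reference background. */
    void saveBackground(int[] frame) {
        background = frame.clone();
    }

    /** Marks every pixel whose brightness differs from the background by more than the threshold. */
    boolean[] foregroundMask(int[] frame) {
        boolean[] mask = new boolean[width * height];
        for (int i = 0; i < mask.length; i++) {
            // Without a saved background, every pixel brighter than the threshold counts as foreground.
            int ref = background == null ? 0 : background[i];
            mask[i] = Math.abs(frame[i] - ref) > threshold;
        }
        return mask;
    }

    /** Groups 4-connected foreground pixels; returns {centroidX, centroidY, pixelCount} per blob. */
    List<double[]> findBlobs(boolean[] mask, int minPixels) {
        boolean[] visited = new boolean[mask.length];
        List<double[]> blobs = new ArrayList<>();
        ArrayDeque<Integer> stack = new ArrayDeque<>();
        for (int start = 0; start < mask.length; start++) {
            if (!mask[start] || visited[start]) continue;
            long sumX = 0, sumY = 0;
            int count = 0;
            visited[start] = true;
            stack.push(start);
            while (!stack.isEmpty()) {
                int i = stack.pop();
                int x = i % width, y = i / width;
                sumX += x;
                sumY += y;
                count++;
                // Visit the four direct neighbours that lie inside the frame.
                if (x > 0) visit(i - 1, mask, visited, stack);
                if (x < width - 1) visit(i + 1, mask, visited, stack);
                if (y > 0) visit(i - width, mask, visited, stack);
                if (y < height - 1) visit(i + width, mask, visited, stack);
            }
            if (count >= minPixels) { // ignore tiny blobs caused by sensor noise
                blobs.add(new double[] {(double) sumX / count, (double) sumY / count, count});
            }
        }
        return blobs;
    }

    private static void visit(int i, boolean[] mask, boolean[] visited, ArrayDeque<Integer> stack) {
        if (mask[i] && !visited[i]) {
            visited[i] = true;
            stack.push(i);
        }
    }
}
```

The explicit stack avoids the deep recursion a naive recursive flood fill would need for large blobs, such as a person standing close to the camera.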

Subsequently, I implemented the Triggerzone functionality, which greatly reduces the programming effort for exhibition designers. Triggerzones are virtual, quad-shaped areas in a room that detect the presence of visitors and therefore behave like light barriers. After finishing both the basic tracking and the Triggerzones, I made all features available in a UI. In the video below, you can see the UI and the Triggerzone functionality working together: the Triggerzones light up when the tracked visitor enters them.
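Because Triggerzones behave like light barriers, a client usually cares about the moment a zone changes state rather than about every single frame. A minimal sketch of that edge detection, assuming each zone's per-frame occupancy is already known; `ZoneStateTracker` and the event strings are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;

/** Turns per-frame zone occupancy into "entered" and "left" events (hypothetical helper). */
final class ZoneStateTracker {
    private final boolean[] wasOccupied; // occupancy from the previous frame

    ZoneStateTracker(int zoneCount) {
        wasOccupied = new boolean[zoneCount];
    }

    /** Compares this frame's occupancy with the previous one and reports only the changes. */
    List<String> update(boolean[] occupiedNow) {
        List<String> events = new ArrayList<>();
        for (int z = 0; z < wasOccupied.length; z++) {
            if (occupiedNow[z] && !wasOccupied[z]) {
                events.add("zone " + z + " entered");
            } else if (!occupiedNow[z] && wasOccupied[z]) {
                events.add("zone " + z + " left");
            }
            wasOccupied[z] = occupiedNow[z];
        }
        return events;
    }
}
```

Sending only state changes keeps network traffic low and maps naturally onto "start clip" and "stop clip" actions in a media server.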

In a last step, I focused on making all this data available to client applications. For this, I used the network protocol OSC, as the literature review showed that it is the most widely supported protocol in applications such as Resolume. Below you can see a video of how Argus Vision controls a projection in Resolume.
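OSC messages are compact binary packets, usually sent over UDP. The sketch below encodes a message by hand following the OSC 1.0 rules (null-terminated ASCII strings padded to a multiple of four bytes, a type tag string starting with a comma, and big-endian 32-bit floats) and sends it with Java's standard `DatagramSocket`. It assumes all arguments are floats; `OscSender` is a hypothetical name, and Processing sketches would more typically use a library such as oscP5.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

final class OscSender {
    /** Writes an OSC string: ASCII bytes, at least one null, padded to a 4-byte boundary. */
    private static void writeString(ByteArrayOutputStream out, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        out.write(bytes, 0, bytes.length);
        int padding = 4 - (bytes.length % 4); // 1 to 4 null bytes
        for (int i = 0; i < padding; i++) out.write(0);
    }

    /** Encodes an OSC message whose arguments are all 32-bit floats. */
    static byte[] encode(String address, float... args) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        writeString(out, address);
        // Type tag string: a comma followed by one 'f' per float argument.
        StringBuilder tags = new StringBuilder(",");
        for (int i = 0; i < args.length; i++) tags.append('f');
        writeString(out, tags.toString());
        // Arguments: IEEE 754 floats in big-endian order (ByteBuffer's default).
        ByteBuffer buf = ByteBuffer.allocate(4 * args.length);
        for (float a : args) buf.putFloat(a);
        out.write(buf.array(), 0, buf.capacity());
        return out.toByteArray();
    }

    /** Sends one message as a single UDP datagram. */
    static void send(String host, int port, String address, float... args) throws IOException {
        byte[] packet = encode(address, args);
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(packet, packet.length, InetAddress.getByName(host), port));
        }
    }
}
```

A call such as `OscSender.send("127.0.0.1", 7000, "/argus/zone/1", 1.0f)` would report an occupied zone; the address pattern and port are hypothetical and have to match whatever the receiving application is configured to listen for.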

Results of the detection process of Argus Vision against an unsaved background (a), a saved background (b), and with activated contour-tracing (c).

Evaluating Argus Vision

I evaluated Argus Vision with two different methods. First, I used it in the interactive installation „Bruch“ to test its feasibility in a real exhibition. The installation operated maintenance-free for a period of six weeks. Find more information about the installation here.

Second, I conducted five expert interviews with exhibition designers from leading exhibition design companies in Germany. My interview partners were:

Each interview started with a warm-up phase, in which I asked about current projects, current trends in exhibition design, and the exhibition designers’ work processes. After that, I asked questions about Argus Vision and its functions before concluding with questions about possible applications and further development. All interviewees gave very positive feedback on the tool. Oschatz, for example, described Argus Vision as “one component in an ecosystem of different technologies used in exhibitions.” He continued that “if exhibition designers knew what they could do with it,” Argus Vision alone “enables sensational possibilities.”

You can read the interviews here.

Used Tools

Hardware

Microsoft Kinect 2

Software

Processing

Languages

Processing/Java

Publications

- Skowronski, M., Klinkhammer, D. & Reiterer, H. (2019). Argus Vision: A Tracking Tool for Exhibition Designers. i-com, 18(1), 41-53. https://doi.org/10.1515/icom-2019-0001

- Skowronski, M., Klinkhammer, D. & Reiterer, H. (2018). Argus Vision: A Tracking Tool for Exhibition Designers. In: Dachselt, R. & Weber, G. (Eds.), Mensch und Computer 2018 – Tagungsband. Bonn: Gesellschaft für Informatik e.V. https://doi.org/10.18420/muc2018-mci-0291

- Skowronski, M. (2016). Argus Vision: Design und Evaluation eines Tracking Tools für Ausstellungsgestaltende. Master’s Thesis. Konstanz: University of Konstanz. https://bit.ly/3hJuwOn