Streaming Point Clouds in Real Time to Enable Interactive Remote Museum Visits

Year

- 2021 – 2022

Tasks

- Research

- Design

- Development

How can visitors experience the social quality of a museum visit in pandemic times?

The NuForm research project strives to enhance hybrid museum experiences by integrating digital and physical elements, blurring the distinction between on-site and remote museum visits.

Working alongside the Museum für Naturkunde, we’ve spent two years developing prototypes that allow visitors to engage with exhibitions in new and interconnected ways.

The goal was to create a seamless environment where in-person and virtual visitors can interact naturally. Imagine examining an exhibit while easily conversing with someone viewing it from their home computer, sharing observations and insights as if you were standing together. These prototypes move beyond the concept of parallel digital and physical experiences. Instead, they integrate both aspects, allowing for richer interactions with the museum’s content and between visitors themselves.

This approach to museum visits could enhance accessibility, foster global connections, and provide new avenues for education and cultural exchange.

A rundown of the different prototypes we developed in the NuForm research project.

Results & Process

As you can see in the rundown video above, we developed various prototypes for this project that differed in how much agency they gave the user. In the 360 prototype, you as a visitor could look around freely and see where other people were looking. Yet you were still tied to the guide’s position as they dragged you through the exhibition, so you could not stop at objects that might interest you more.
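To show where other visitors are looking in a 360 video, you need to map each visitor’s view direction onto the video frame. Here is a minimal sketch. It assumes an equirectangular 360 video and that each client shares its head orientation as yaw and pitch in degrees. The function name and angle conventions are hypothetical and are not taken from the prototype.

```python
def gaze_to_equirect(yaw_deg, pitch_deg, width, height):
    """Map a view direction to pixel coordinates in an equirectangular 360 frame.

    Assumed conventions (hypothetical): yaw in [-180, 180) with 0 at the
    horizontal centre of the frame, pitch in [-90, 90] with positive = up.
    """
    # Longitude maps linearly to the horizontal axis (full 360 degrees = width)
    u = (yaw_deg + 180.0) / 360.0 * width
    # Latitude maps linearly to the vertical axis (top row = straight up)
    v = (90.0 - pitch_deg) / 180.0 * height
    # Wrap horizontally, clamp vertically so pitch = -90 stays inside the frame
    return int(u) % width, min(int(v), height - 1)
```

Each client could then draw a small marker for every other visitor at the returned pixel position. For example, `gaze_to_equirect(0, 0, 3840, 1920)` lands in the centre of a 4K equirectangular frame.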

With this drawback in mind, we explored the potential of 3D models of the museum, which would let you walk through a fully virtual space. But this, of course, comes with other disadvantages. These 3D models are pre-scanned and therefore do not represent the museum in its current state: if someone changes something “in the real world” or a person walks through the museum, you won’t see it in the 3D world.

From static to dynamic worlds

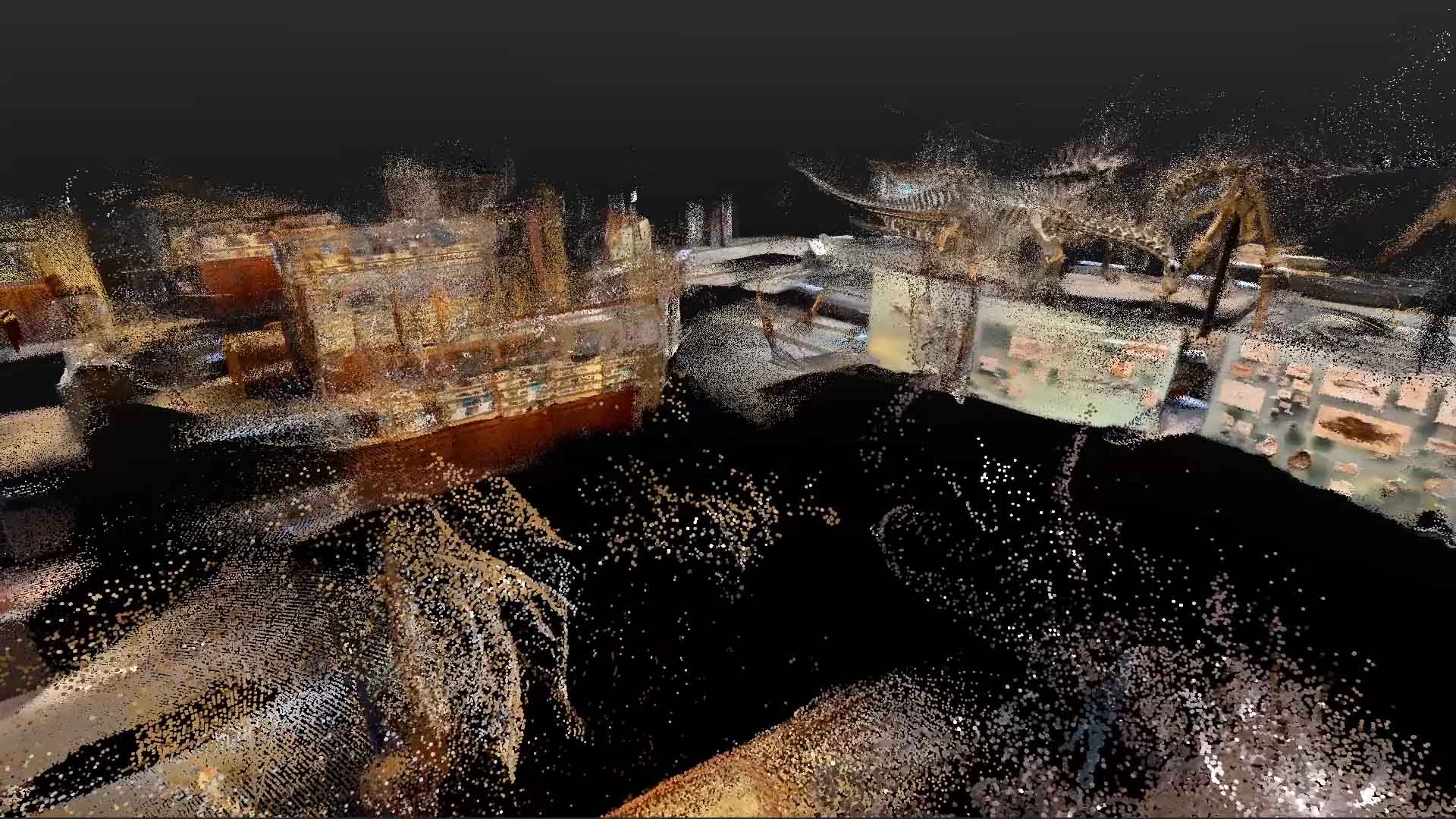

Using a scanned point cloud model of the “dinosaur room” in the Museum für Naturkunde and some 360 video recordings we had of the same room, I quickly developed a first idea to overcome this: you as a visitor could walk freely through the scanned point cloud.

At various positions in the point cloud, bubbles showing the 360 videos hovered in the air. They also emitted 3D audio of the museum’s sounds, drawing you towards them. When you entered one of the bubbles, you were sucked into the 360 view and could look around freely. This gave you a (faked for privacy reasons ;)) real-time view of what was happening in the museum at that moment.
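The bubble mechanic can be sketched as a sphere test plus a distance-based volume falloff. This is an illustration built on a few assumptions:

- Bubbles are spheres with a hypothetical `radius`.
- The closest bubble wins when several overlap.
- A simple inverse-distance gain stands in for whatever rolloff the actual Unity audio sources used.

```python
import math
from dataclasses import dataclass


@dataclass
class Bubble:
    # Hypothetical model of a 360-video bubble placed in the point cloud scene
    name: str
    center: tuple
    radius: float  # metres; stepping inside this sphere switches to the 360 view


def active_bubble(visitor_pos, bubbles):
    """Return the bubble the visitor is inside, or None (closest wins on overlap)."""
    inside = [b for b in bubbles if math.dist(visitor_pos, b.center) <= b.radius]
    return min(inside, key=lambda b: math.dist(visitor_pos, b.center), default=None)


def audio_gain(visitor_pos, bubble, min_distance=1.0):
    """Assumed inverse-distance rolloff: full volume up to min_distance, then 1/d."""
    d = math.dist(visitor_pos, bubble.center)
    return 1.0 if d <= min_distance else min_distance / d
```

Called once per frame, `active_bubble` decides when to trigger the transition into the 360 view. `audio_gain` shows why a distant bubble is still audible enough to draw visitors towards it.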

A visitor moves from the point cloud to the 360 view.

Can’t we make it fully realtime?

This led directly to the second, fully fledged prototype, called Point Cloud Walk. We asked ourselves whether it would be possible to stream not only 360 videos but also the LiDAR data that LiDAR-equipped iOS devices can record. This way, a guide could start their tour anywhere and scan the environment as they go, while visitors walk around in these spaces as they are recorded in real time.
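Turning a LiDAR depth frame into points that visitors can walk around in usually means two steps:

1. Unproject every depth pixel through a pinhole camera model.
2. Move the result into world space with the device pose.

Here is a dependency-free sketch. It assumes a depth map in metres, intrinsics `fx`, `fy`, `cx`, `cy` and a row-major 4×4 camera-to-world matrix. All names are hypothetical, and ARKit-specific axis conventions are deliberately left out.

```python
def unproject(depth, fx, fy, cx, cy, cam_to_world):
    """Convert a depth map (list of rows, metres) into world-space 3D points.

    cam_to_world is a hypothetical 4x4 row-major pose matrix (nested lists).
    """
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if z <= 0:  # skip invalid or missing depth samples
                continue
            # Pinhole model: pixel (u, v) at depth z -> camera-space point
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            cam = (x, y, z, 1.0)  # homogeneous coordinates
            # Rigid transform into world space using the device pose
            world = tuple(
                sum(cam_to_world[i][k] * cam[k] for k in range(4)) for i in range(3)
            )
            points.append(world)
    return points
```

A real-time implementation would more likely do this per frame on the GPU, for example in a shader. The Python loop only makes the arithmetic explicit.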

Implementing this was a real challenge because of the large datasets that had to be transferred in real time and, if possible, over mobile data. I needed a format that stores the position and color data of the point cloud, as well as the position and orientation of the recording device, as compactly as possible. Ideally, it would also adapt to the user’s bandwidth, the way WebRTC does for video conferences in tools such as Teams or Zoom.
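To get a feel for the data volume, here is a hand-rolled binary format. It quantizes each coordinate to 16 bits inside a fixed bounding box and stores colour as 8-bit RGB, giving 9 bytes per point. A per-frame device pose of 7 floats (position plus quaternion) goes in front. The bounding box, field order and function names are hypothetical illustrations, not the format the prototype used.

```python
import struct

# Hypothetical bounding box of the scanned scene in metres, used for quantization
BOUNDS_MIN = (-10.0, -2.0, -10.0)
BOUNDS_MAX = (10.0, 6.0, 10.0)
POSE_FORMAT = "<7f"     # position (x, y, z) + orientation quaternion (x, y, z, w)
POINT_FORMAT = "<3H3B"  # 3 x uint16 position + 3 x uint8 colour = 9 bytes


def quantize(value, lo, hi):
    """Clamp a float to [lo, hi] and map it to an integer in [0, 65535]."""
    t = (min(max(value, lo), hi) - lo) / (hi - lo)
    return round(t * 65535)


def dequantize(q, lo, hi):
    return lo + q / 65535 * (hi - lo)


def pack_frame(pose, points):
    """pose: 7 floats; points: iterable of ((x, y, z), (r, g, b))."""
    out = bytearray(struct.pack(POSE_FORMAT, *pose))
    for xyz, (r, g, b) in points:
        qs = [quantize(c, lo, hi) for c, lo, hi in zip(xyz, BOUNDS_MIN, BOUNDS_MAX)]
        out += struct.pack(POINT_FORMAT, *qs, r, g, b)
    return bytes(out)


def unpack_frame(data):
    pose = struct.unpack_from(POSE_FORMAT, data, 0)
    start = struct.calcsize(POSE_FORMAT)
    step = struct.calcsize(POINT_FORMAT)
    points = []
    for off in range(start, len(data), step):
        *qs, r, g, b = struct.unpack_from(POINT_FORMAT, data, off)
        xyz = tuple(dequantize(q, lo, hi) for q, lo, hi in zip(qs, BOUNDS_MIN, BOUNDS_MAX))
        points.append((xyz, (r, g, b)))
    return pose, points
```

Even at 9 bytes per point (instead of 15 for float32 positions plus 8-bit colour), the numbers are sobering. Assume a 256×192 depth map, the resolution commonly reported for ARKit’s LiDAR depth. That gives 49,152 points and about 442 kB per frame, or roughly 106 Mbit/s at 30 fps. This back-of-the-envelope estimate shows why reusing a video codec and WebRTC’s bandwidth adaptation is so attractive.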


Lo and behold: I found the amazing Bibcam plugin by Keijiro, which encodes all this data into a single video file that can later be decoded back into the original data. In theory, we could simply stream this video to other users via the Janus WebRTC Server, which supports broadcasting. We could then also use other WebRTC features, such as audio transmission, so that visitors could talk to each other. But of course, Unity’s WebRTC implementation did not support this kind of broadcasting out of the box!
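Packing non-image data into a video only works if that data survives lossy compression. One common trick is to write metadata such as the camera pose into a strip of large black-and-white blocks, then recover it by averaging and thresholding each block. This sketch is purely illustrative and does not describe Bibcam’s actual frame layout. The block size and function names are hypothetical.

```python
import struct

BLOCK = 8  # hypothetical block size in pixels; larger blocks survive compression better


def encode_metadata(values, width):
    """Encode float32 values as BLOCK x BLOCK black/white squares (LSB first).

    Returns a grayscale strip as a list of pixel rows with values 0..255.
    """
    bits = []
    for byte in struct.pack(f"<{len(values)}f", *values):
        bits.extend((byte >> i) & 1 for i in range(8))
    per_row = width // BLOCK
    block_rows = -(-len(bits) // per_row)  # ceiling division
    img = [[0] * width for _ in range(block_rows * BLOCK)]
    for i, bit in enumerate(bits):
        by, bx = divmod(i, per_row)
        for y in range(by * BLOCK, (by + 1) * BLOCK):
            for x in range(bx * BLOCK, (bx + 1) * BLOCK):
                img[y][x] = 255 * bit
    return img


def decode_metadata(img, count):
    """Recover `count` float32 values from a (possibly noisy) block strip."""
    per_row = len(img[0]) // BLOCK
    bits = []
    for i in range(count * 32):
        by, bx = divmod(i, per_row)
        block = [img[y][x]
                 for y in range(by * BLOCK, (by + 1) * BLOCK)
                 for x in range(bx * BLOCK, (bx + 1) * BLOCK)]
        # Averaging the whole block and thresholding at mid-grey tolerates codec noise
        bits.append(1 if sum(block) / len(block) >= 128 else 0)
    data = bytes(sum(bits[j + i] << i for i in range(8)) for j in range(0, len(bits), 8))
    return list(struct.unpack(f"<{count}f", data))
```

Because each bit covers 64 pixels, moderate compression artefacts shift the block average without flipping the bit. Depth itself needs a different, smoother encoding, since lossy codecs distort fine per-pixel values.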

In the end, I had to hack deep into Unity’s WebRTC implementation to integrate the broadcasting handshake with the Janus server. This took some time and was super stressful, as it was quite uncertain whether it would work at all. But the moment the same point cloud suddenly popped up on 10 different devices was definitely worth it! 🙂
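For orientation, here is roughly what a one-to-many handshake with Janus looks like at the message level, sketched as JSON builders. This assumes the VideoRoom plugin. The text does not say which Janus plugin was used, and the helper names are hypothetical. The guide’s device joins a room as a publisher and sends an SDP offer. Each visitor joins as a subscriber to that feed and answers the SDP offer Janus sends back. Trickle ICE and keepalive messages are omitted. Newer Janus versions also accept a `streams` list for subscribers, so check the VideoRoom documentation for the version you run.

```python
import json
import uuid


def _msg(janus, session_id=None, handle_id=None, **extra):
    """Build a Janus API envelope; every request carries a unique transaction id."""
    msg = {"janus": janus, "transaction": uuid.uuid4().hex}
    if session_id is not None:
        msg["session_id"] = session_id
    if handle_id is not None:
        msg["handle_id"] = handle_id
    msg.update(extra)
    return msg


def create_session():
    return _msg("create")


def attach_videoroom(session_id):
    return _msg("attach", session_id, plugin="janus.plugin.videoroom")


def join_as_publisher(session_id, handle_id, room, display):
    body = {"request": "join", "ptype": "publisher", "room": room, "display": display}
    return _msg("message", session_id, handle_id, body=body)


def publish(session_id, handle_id, sdp_offer):
    # The guide's device offers its stream; Janus answers asynchronously in an event's "jsep"
    return _msg("message", session_id, handle_id,
                body={"request": "publish"}, jsep={"type": "offer", "sdp": sdp_offer})


def join_as_subscriber(session_id, handle_id, room, feed_id):
    # Janus replies with an SDP offer for the publisher's feed
    body = {"request": "join", "ptype": "subscriber", "room": room, "feed": feed_id}
    return _msg("message", session_id, handle_id, body=body)


def start_subscription(session_id, handle_id, sdp_answer):
    return _msg("message", session_id, handle_id,
                body={"request": "start"}, jsep={"type": "answer", "sdp": sdp_answer})


def to_wire(msg):
    """Serialize for a WebSocket text frame."""
    return json.dumps(msg)
```

Janus relays the single published stream to every subscriber. The guide’s device therefore uploads the point cloud video only once, no matter how many visitors are watching.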

Publications and Awards

We presented our work in talks and workshops at various conferences, such as ICOM CECA 2022 in Moesgaard, Denmark, and MuseumNext XR 2024.

Used Tools

Hardware

iPad, a 3D-printed "pilgrim’s staff", various microcontrollers

Software

Unity, Janus WebRTC Server

Languages

C#