A System To Study The Implications Of Using Augmented Reality For Co-Located And Remote Affinity Diagramming

Client

- University of Konstanz (Master’s Thesis)

Year

- 2021

Tasks

- Analysis

- Concept

- Implementation

- Pilot Study

Can Augmented Reality substitute for physical creative collaboration?

Before COVID-19, designers around the world relied on pen and paper for most ideation work. The omnipresent post-its that fill entire walls in design studios are the most visible example of this. But working with such materials comes with drawbacks. For example, documenting large Affinity Diagrams is cumbersome and sometimes even impossible, as one has to transcribe every single post-it. With COVID-19, another striking disadvantage became apparent: you cannot collaborate remotely using physical material.

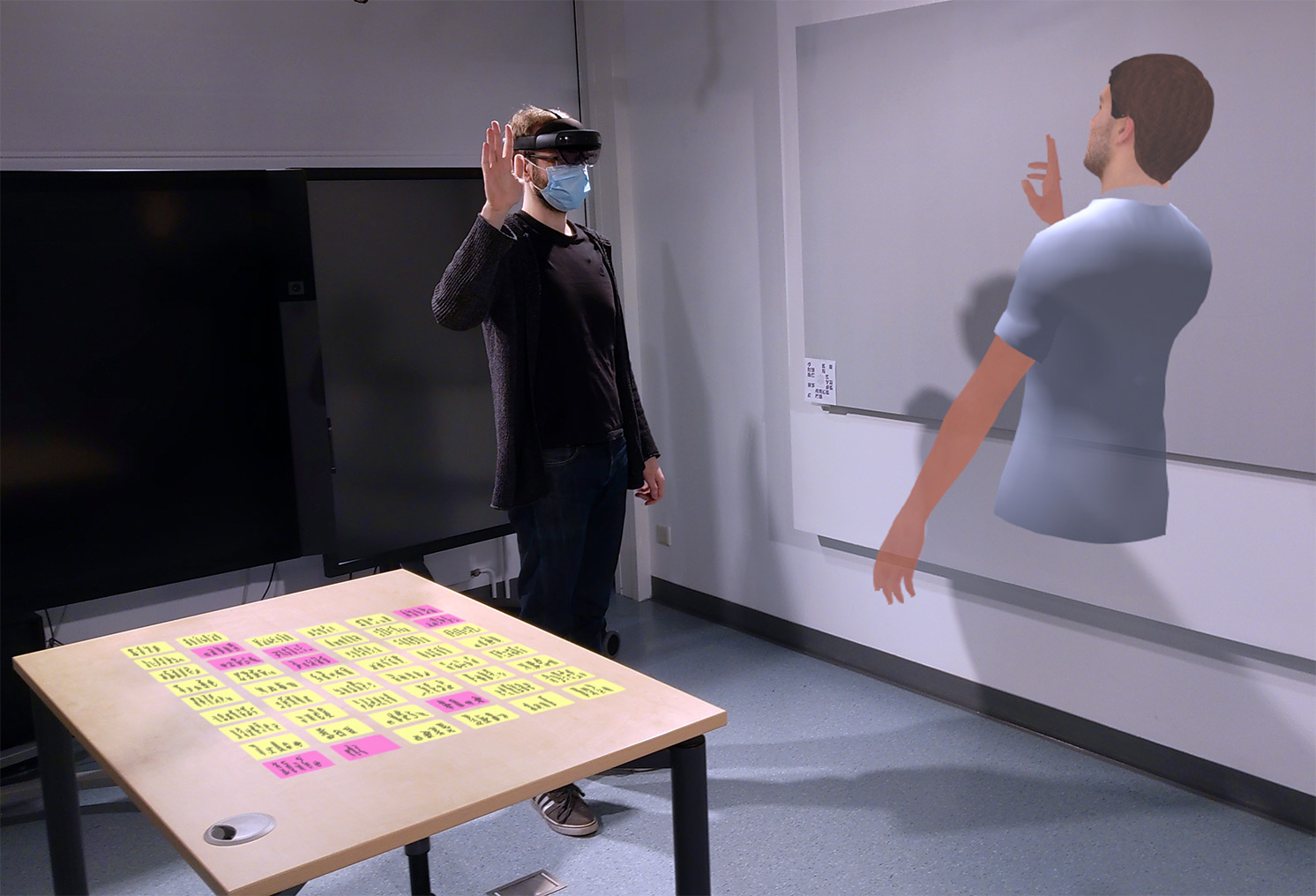

A technology that can potentially support such practices is Augmented Reality (AR). With it, designers can interact with virtual objects in the same way as with real ones, closely emulating work with physical notes. And, as tools like Spatial show, AR can enable natural remote collaboration by visualizing collaborators as virtual avatars that mimic their body and hand movements. While this looks very promising, there have so far been no studies that investigate what actually happens when analog work practices such as Affinity Diagramming are transformed into digital ones using AR.

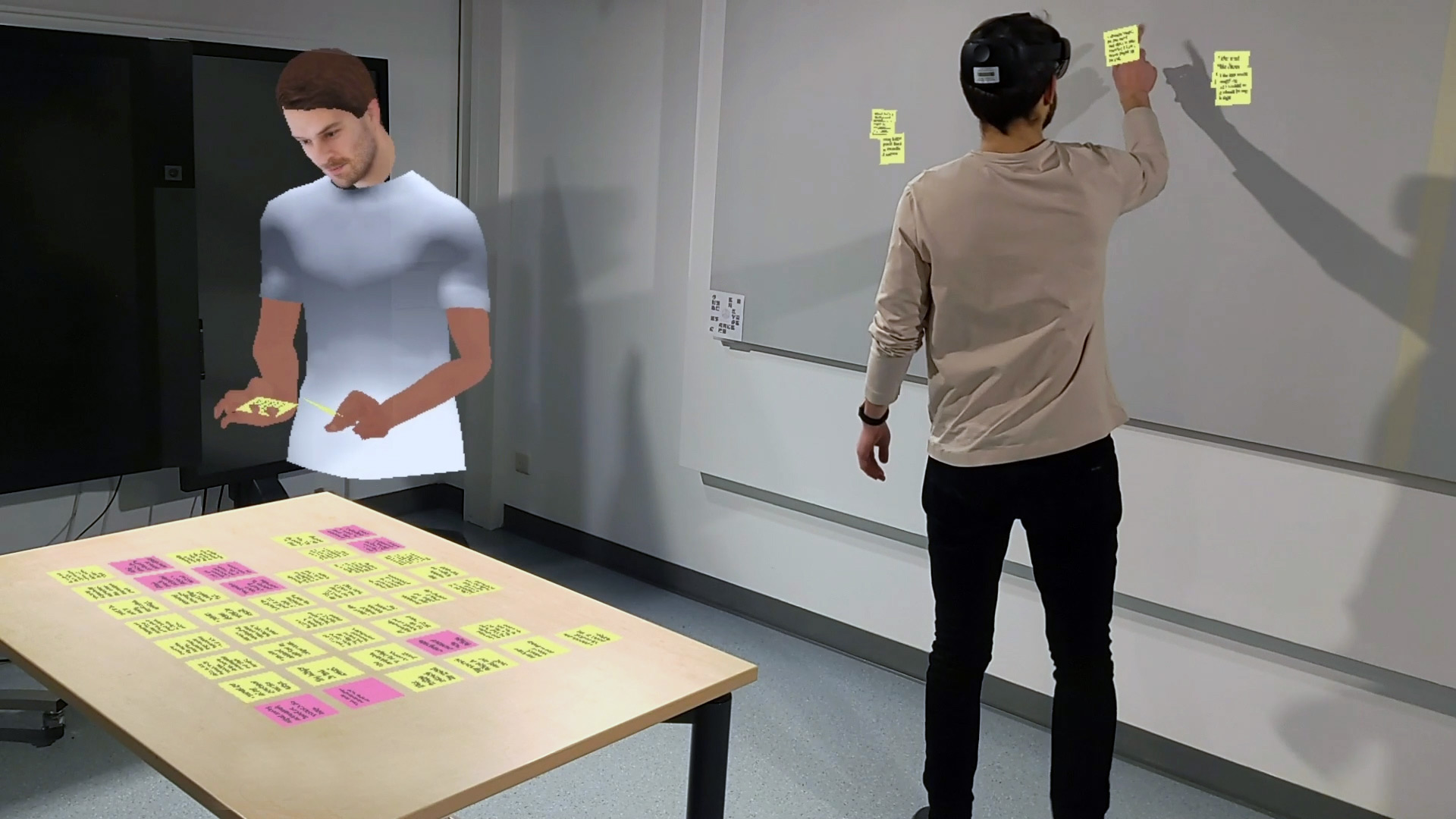

Because of this, I developed an AR application that closely mimics physical Affinity Diagramming and allows for remote collaboration using avatars. Using this application, I further designed two user studies that compare (i) the co-located physical practice to the co-located practice in AR and (ii) the co-located AR practice to its remote counterpart, providing a nuanced view of the benefits and challenges of using AR for creative work.

Augmented Affinity Diagramming allows users to perform Affinity Diagramming and other creativity methods in the way they are used to, without even being in the same room.

Results

This work was timely because COVID-19 made remote collaboration essential, but the pandemic also prevented me from conducting the studies as originally planned. Consequently, my goal in the master's thesis was to prepare the studies so that a third party can conduct them as soon as health regulations allow. To this end, I developed the AR application together with several study applications that help run the studies smoothly. I also described the two studies in detail and reported the results of a pilot test for the remote collaboration condition, which showed that the studies can be conducted as soon as health regulations allow.

Augmented Reality Affinity Diagramming

To allow a fair comparison between AR Affinity Diagramming and Affinity Diagramming with physical post-its, my goal was to design the AR application to resemble physical Affinity Diagramming as closely as possible. Therefore, interaction with augmented sticky notes should mimic interaction with physical notes. Users can pick up sticky notes and drop them on physical surfaces, pin sticky notes on top of each other or to the palm of their hand, and even let them fall to the floor.

Users can pick up notes with a grab gesture and place them on physical surfaces.

Users can pin notes on top of each other. If a user moves a lower note, all notes pinned on top of it move with it.

Users can pin notes to the palm of their hand. The notes remain there until the user picks them up again.

If a user releases the grab gesture, notes fall to the floor as if affected by gravity.
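The rule that pinned notes travel with the note beneath them can be sketched as a simple parent-child structure. The following TypeScript is a minimal illustration only, not the thesis implementation (which was built in Unity). `StickyNote`, `pin`, and `moveBy` are hypothetical names, and the sketch assumes that notes are never pinned in a cycle.

```typescript
// Hypothetical model for illustration; names are not taken from the thesis code.
type Vec3 = { x: number; y: number; z: number };

class StickyNote {
  // Notes that are pinned directly on top of this note.
  readonly pinnedOnTop: StickyNote[] = [];

  constructor(readonly id: string, public position: Vec3) {}

  // Pin another note on top of this one (assumption: no cycles are created).
  pin(note: StickyNote): void {
    this.pinnedOnTop.push(note);
  }

  // Move this note by a delta and carry along every note pinned on top of it.
  moveBy(delta: Vec3): void {
    this.position = {
      x: this.position.x + delta.x,
      y: this.position.y + delta.y,
      z: this.position.z + delta.z,
    };
    for (const child of this.pinnedOnTop) {
      child.moveBy(delta);
    }
  }
}
```

Moving a lower note therefore moves its whole pile, while moving an upper note leaves the notes beneath it where they are.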

Remote Collaboration in Augmented Reality

Apart from synchronizing all necessary data between devices over the network and solving other technical issues that I discuss in the Process section, the main goal was to visualize users as 3D avatars that look like them and mimic their body movement. The avatars' faces were generated from each user's portrait photo using Avatar SDK, while the avatar bodies were pre-constructed. Each avatar moves and rotates the same way as its user and also mimics the user's hand and even finger movements. Finally, the avatars' mouths move when the users speak.

Tools for conducting the study

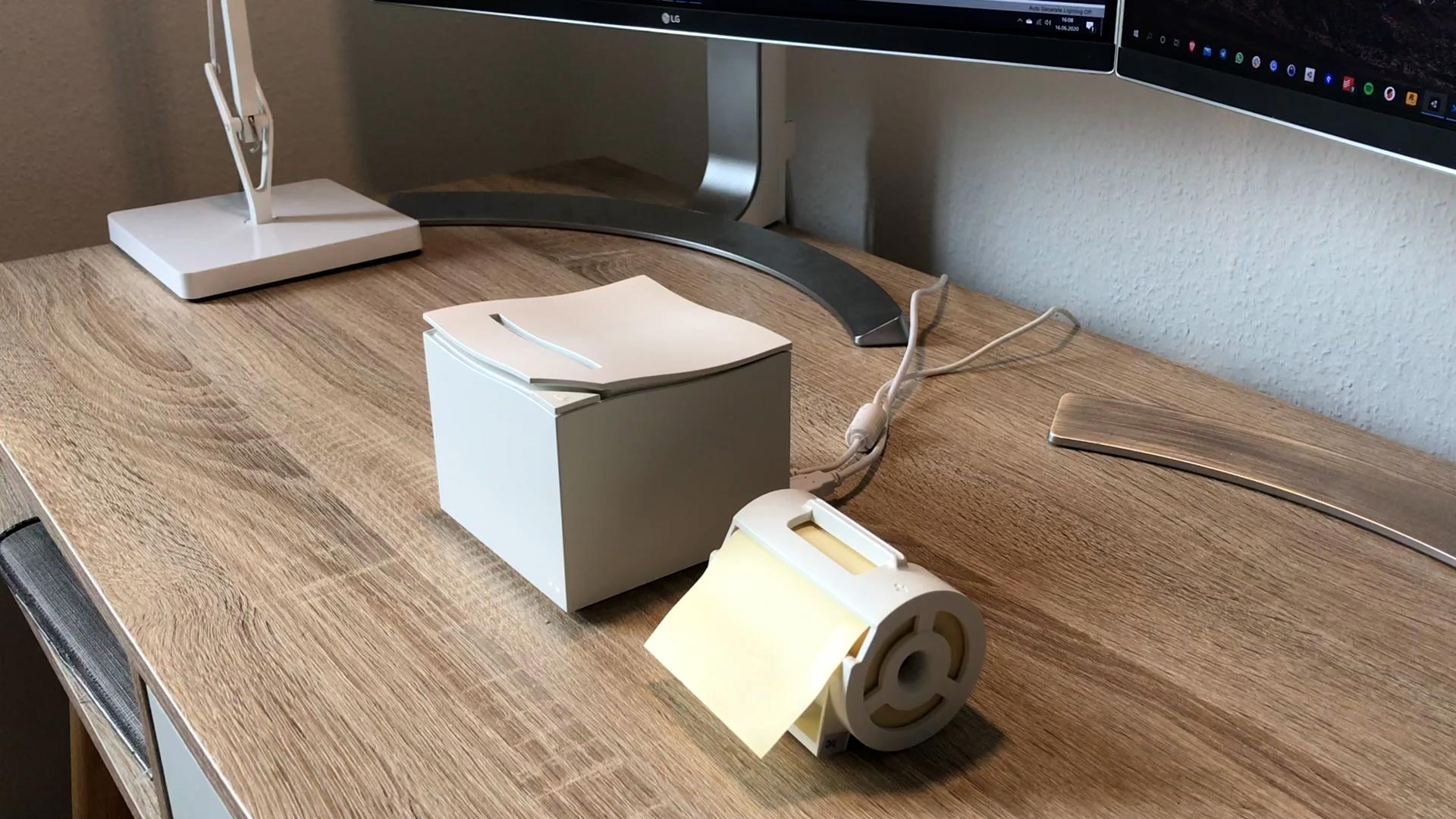

As I could not conduct the studies myself, I had to make sure that a third party can conduct them. To this end, I created various applications that make running the studies easy. Some applications help during the study procedure, while others make it easy to set up the study. For example, as I wanted to compare real-world physical Affinity Diagramming with AR Affinity Diagramming, I needed the augmented post-its to look the same as the physical ones. For this, I created an application that took screenshots of the augmented post-its and printed them on a post-it printer.

I also created an iPad application, which all study participants receive at the beginning of the study. Here, they fill out all questionnaires of the study and receive tutorials on Affinity Diagramming in AR. This ensures that all participants go through the same process and therefore makes the study easier for both the study conductor and the participants.

An example from the iPad application: Tutorial videos teach the participants how to interact with the augmented post-its.

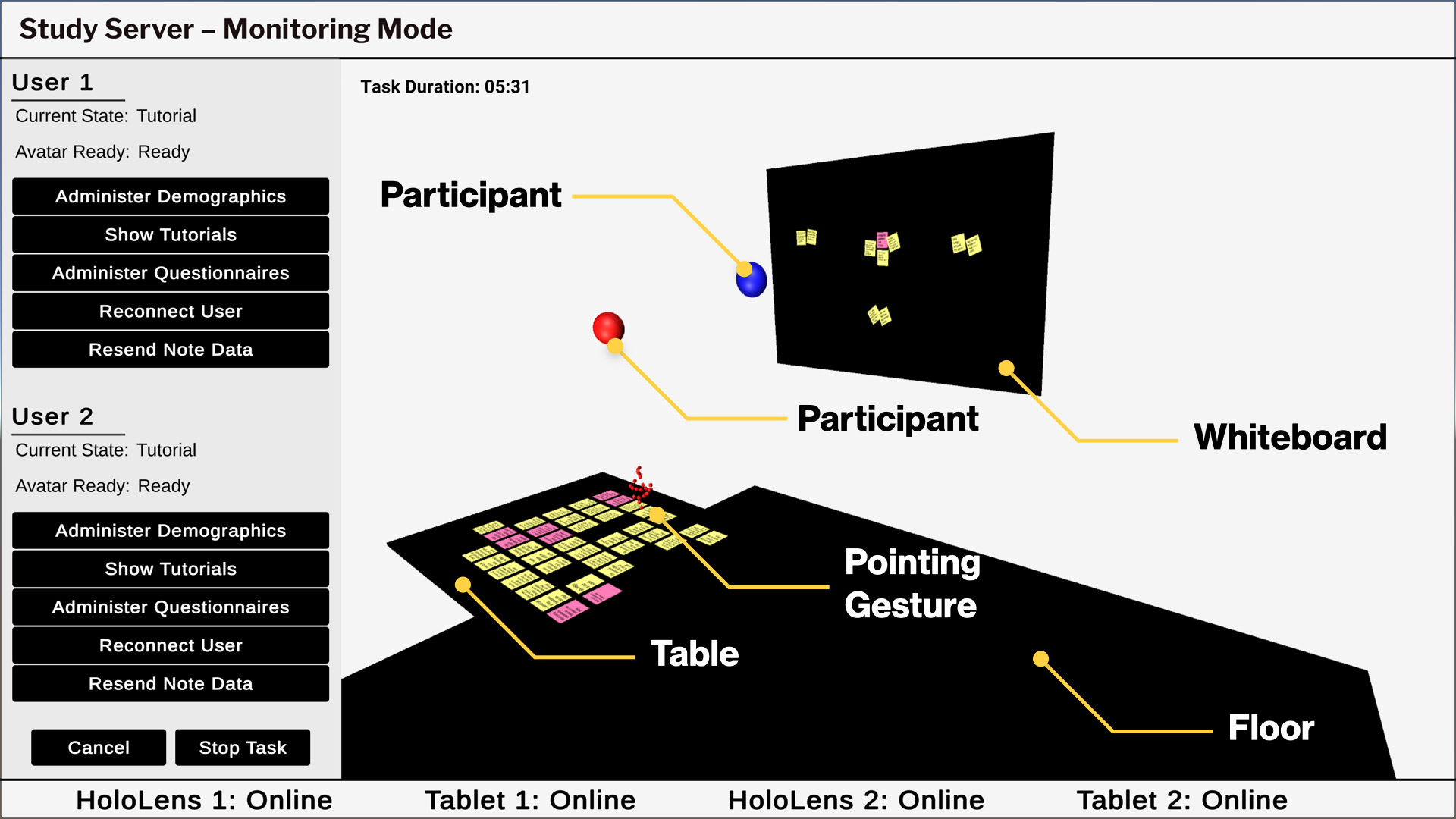

Finally, I implemented a desktop application for the study conductor. Here, the study conductor can control the study procedure. For example, they can send questionnaires to the participants' tablets or reconnect devices in case of an error. However, the most important feature is that the study conductor can observe what the participants are doing in AR, which would otherwise be impossible as the conductor does not wear an AR headset.

Process

While my bachelor’s thesis was strongly design-oriented, I wanted to focus more on UX research in my master’s thesis. Inspired by a presentation about the use of post-its in design environments and how designers still prefer these physical artifacts over working digitally, I set out to answer the question of why that is, identified AR as a potential technology to best support design work, and designed a study and prototype to investigate this assessment.

Mapping the research field

My research was based on two key observations. First, reading about design and creativity methods, I noticed that designers almost exclusively use physical tools during ideation, while they use digital tools for all other work. This is nicely reflected in a 2019 survey among UX professionals. Second, I observed the rise of remote spatial collaboration tools like Spatial.

It struck me that the Spatial video actually shows things designers do during ideation, that is, exactly the things they would normally use physical tools for. This is what brought me to the idea of investigating what actually happens if one uses AR for creativity methods. Investigating AR as a potential technology for creative collaboration proved to be a challenging task, as I had to analyze and connect various strands of research on design, creativity, computer-supported cooperative work, and AR. However, building these theoretical foundations made it easier to manage the development and study design later on.

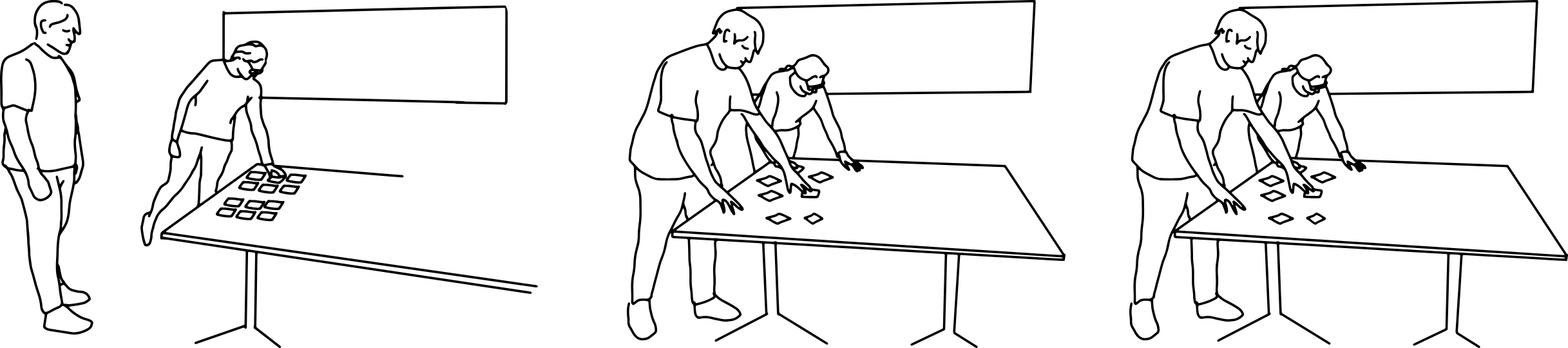

Designing the Study

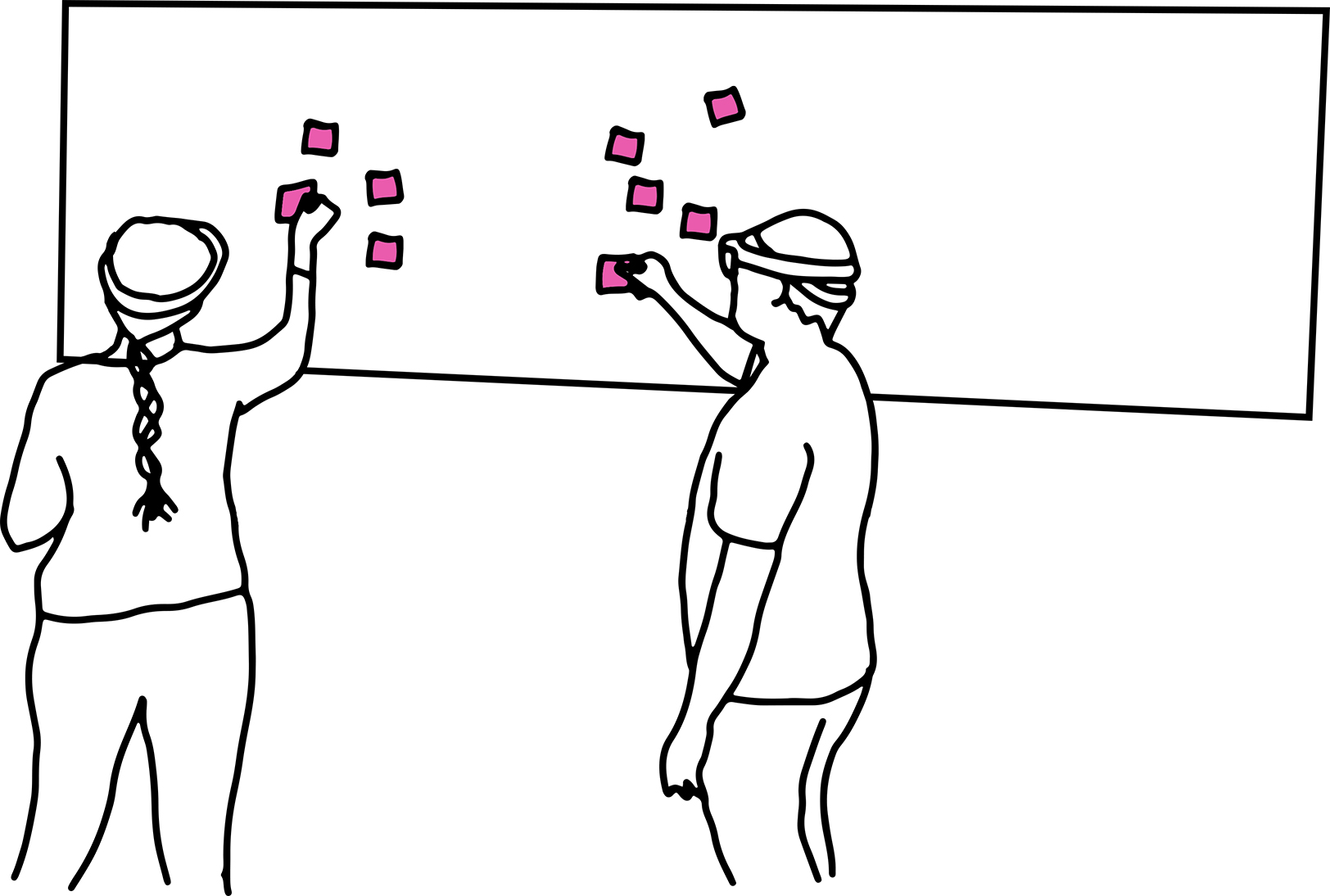

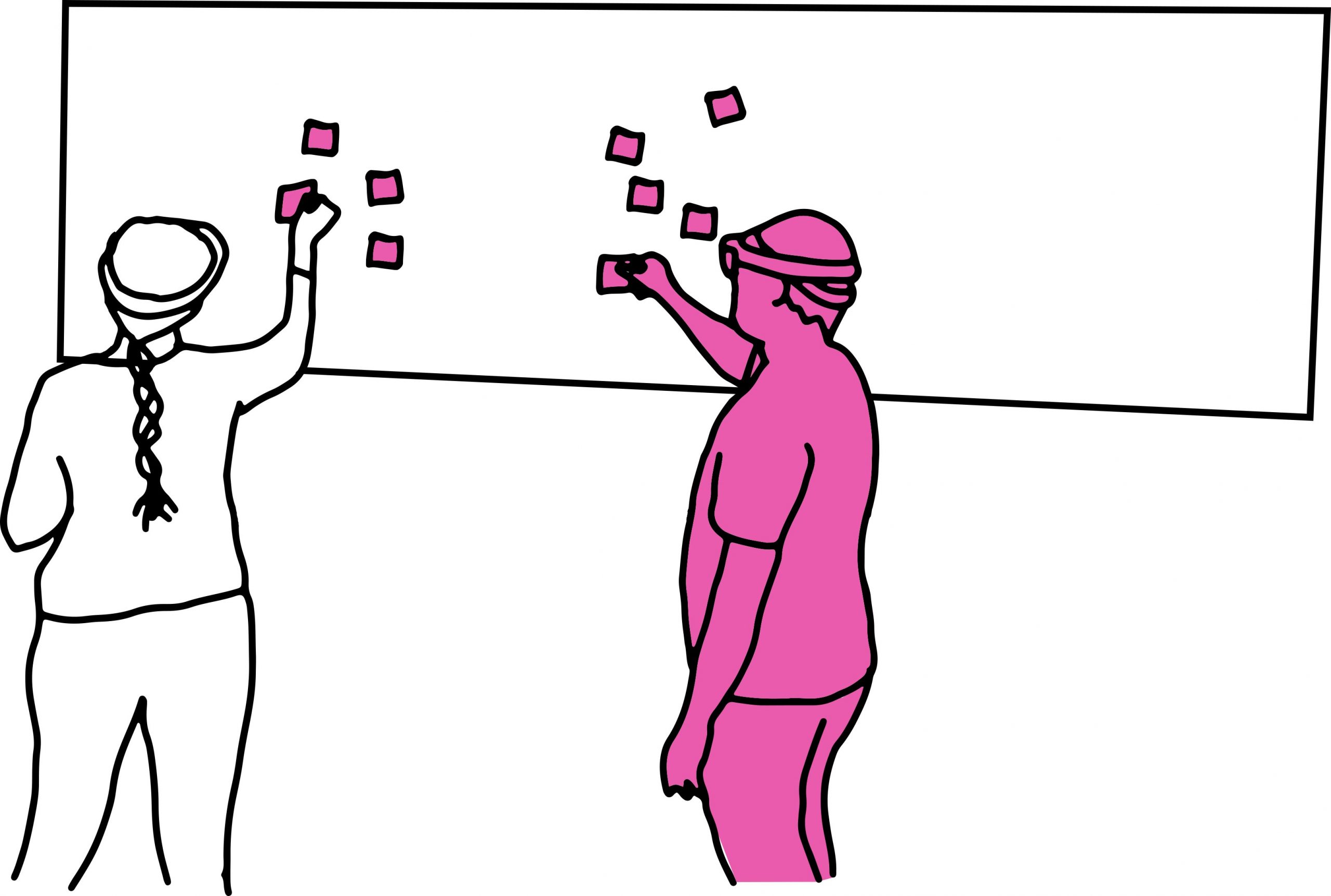

Taking previous studies on the effects of technology on (creative) collaboration as an inspiration, I designed two studies that investigate (i) the effect of using AR for co-located Affinity Diagramming and (ii) the effect of using AR for remote Affinity Diagramming.

As the health regulations for the COVID-19 pandemic did not allow conducting such studies at that time, I defined instructions in detail on setting up, conducting, and evaluating these studies. Using these instructions, a third party can conduct them as soon as the health regulations allow it. For example, I not only defined the studies’ overall research objectives and questions, but also discussed the studies’ expected findings based on the theoretical foundations and related work.

Developing the Study Prototype

Defining the study design in such detail also enabled me to clearly define the requirements for the study prototype and, therefore, to develop it more quickly. Nevertheless, it was definitely the most challenging project I have ever developed. Besides the technical aspects, developing reality-based interactions proved to be the most demanding work. Below, I describe one example of the iterative process: implementing augmented post-its that feel natural. Here, I iterated through different versions of augmented post-its, regarding both their interaction and their appearance.

The first version I developed was a simple rectangle, or quad, which could have one of three colors and had 3D text on top of it. Additionally, I implemented what I called "Placement Projections", which showed where the post-it could be pinned when the user interacted with it.

The first Post-it iteration was a quad-shaped area with text on it and a black "Placement Projection", which showed where the Post-it would be pinned when the user interacted with it.

Another feature I implemented was the ability to put multiple post-its in the palm of your hand and transport them. This idea was based on literature research. Here, the first idea was to use a Mixed Reality Toolkit building block called Grid Object Collection, which automatically laid out the post-its in a user-defined grid.

The post-it stack was snapped to the user's hand. When the user placed a post-it on the stack, it was automatically aligned to a vertical grid and rotated slightly to give it a more natural feel.
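The stacking layout described above can be approximated as follows. This is a hedged TypeScript sketch under stated assumptions, not the MRTK Grid Object Collection and not the thesis code: `layoutStack`, `StackSlot`, the spacing value, and the alternating tilt rule are all hypothetical choices made for illustration, and the grid is simplified to a single column.

```typescript
// Hypothetical layout helper; it does not reproduce any MRTK API.
interface StackSlot {
  index: number;       // position in the stack, 0 = bottom
  offsetY: number;     // vertical offset from the hand anchor
  tiltDegrees: number; // small rotation for a less mechanical look
}

function layoutStack(count: number, spacing = 0.002, maxTilt = 4): StackSlot[] {
  const slots: StackSlot[] = [];
  for (let i = 0; i < count; i++) {
    // Assumption: the bottom note lies flat, later notes alternate tilt direction.
    const tilt = i === 0 ? 0 : (i % 2 === 0 ? 1 : -1) * maxTilt;
    slots.push({ index: i, offsetY: i * spacing, tiltDegrees: tilt });
  }
  return slots;
}
```

A deterministic alternating tilt is used here instead of a random one so that the layout stays stable when the stack is recomputed.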

But this implementation resulted in a lot of issues. The first was that you could only ever see the post-it on top of the stack. Moreover, I had to implement the stack so that one could only take the uppermost post-it, because the HoloLens 2 tracking was not precise enough to take, for example, the second post-it from the bottom. But the issue that made me rewrite it altogether was that I reached a point where I thought, "If one can stack the post-its on their hands, why can’t one stack post-its anywhere else?" In the analog, real world, it is quite common to pin post-its onto each other, either to save space or to move them together.

So, I changed the post-its to be closer to their real-world 3D counterparts. For this, I designed a post-it that consisted of two parts: an adhesive strip and a text area. The two quads were set at a slight angle to each other, mimicking physical post-its. In this implementation, only the adhesive strip of the post-it could stick to surfaces and to other post-its. Adding the possibility to pin post-its to each other also made the application more consistent, because users could now use the same interaction for pinning post-its to their hand or to other post-its.

In another iteration, post-its consisted of an adhesive strip and a text area. The text area was slightly bent, giving the post-it a more natural 3D feel.

Later I added even more reality-based features, like Post-its falling to the floor as if affected by gravity. But I think this example shows how difficult it is to build interactions based on the real world. The possibilities are endless and there is always something you miss.

Pilot Testing and Future Work

I was able to run a pilot test with six participants to verify that all data is measured correctly and that the planned user studies can be conducted successfully. Everything went without issues, and the pilot test results were very promising. While the pilot test could of course not replace conducting the full studies myself, the plan is to conduct the studies at a later point and hopefully publish them at a major human-computer interaction conference. Fingers crossed!

Used Tools

Hardware

Microsoft HoloLens 2, Apple iPad 2 Pro

Software

Unity, MRTK, Avatar SDK, SALSA LipSync

Languages

C#, TypeScript

Publications

- Skowronski, M. (2021). Collaborative Embodied Creativity Methods in Augmented Reality: A System to Study the Implications of Using Augmented Reality for Co-Located and Remote Affinity Diagramming. Master’s Thesis. Konstanz: University of Konstanz.